Neuroscientists Peer Into The Mind’s Eye

24:28 minutes

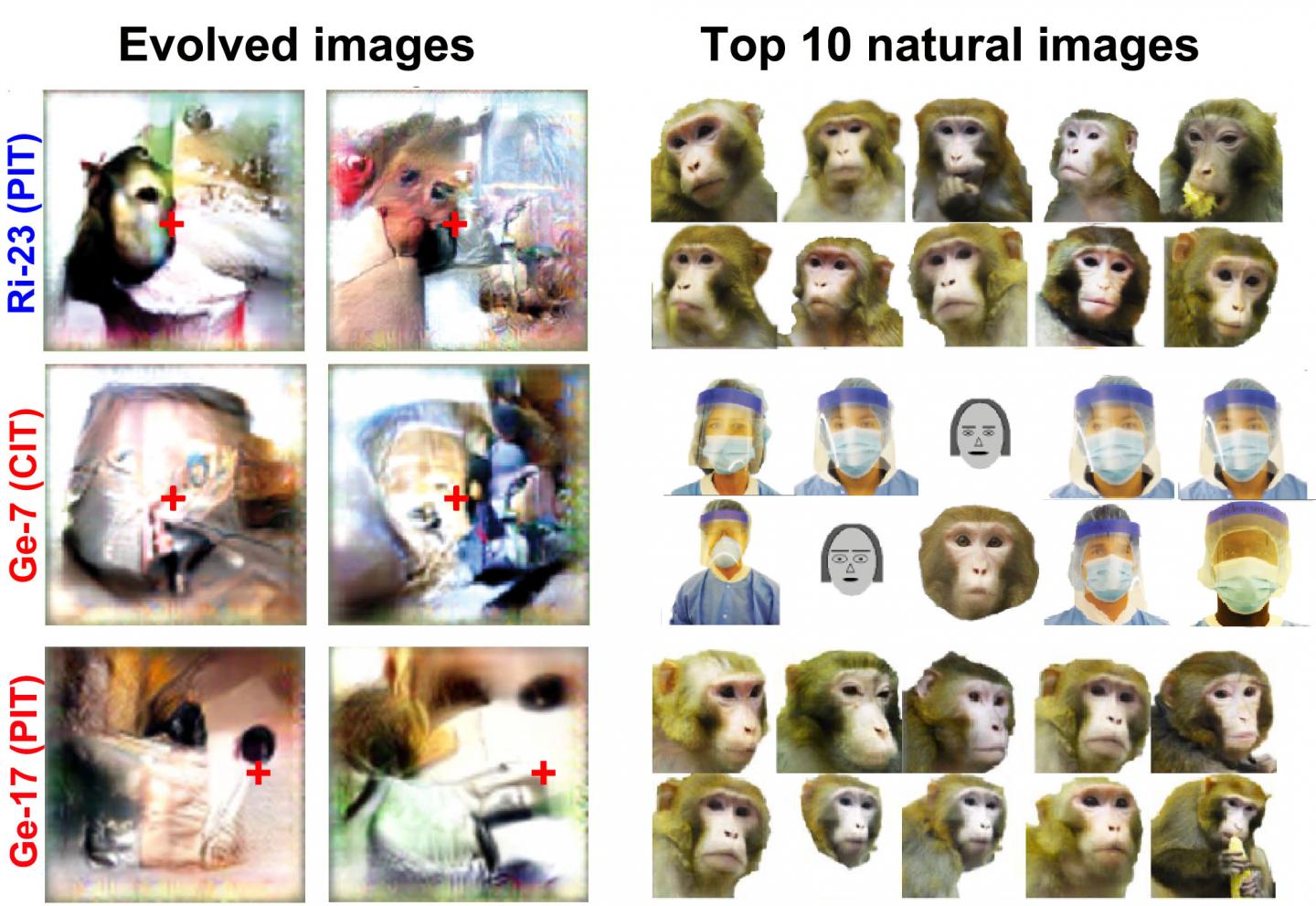

It sounds like a sci-fi plot: Hook a real brain up to artificial intelligence, and let the two talk to each other. That’s the design of a new study in the journal Cell, in which artificial intelligence networks displayed images to monkeys, and then studied how the monkey’s neurons responded to the picture. The computer network could then use that information about the brain’s responses to tweak the image, displaying a new picture that might resonate more with the monkey’s visual processing system.

“The first time we saw this happening we felt like we were communicating with a neuron in its own language, like we’d given the cell the ability to tell us something new,” says Carlos Ponce of the Washington University School of Medicine in St. Louis.

After many iterations of this dance between brain activity and image creation, the researchers were left with a set of ghostly images, “designed” in part by what the monkey’s visual cells were encoded to respond to.

In this segment, Ponce and his collaborator Margaret Livingstone talk about what the study tells us about how the brain “sees,” and how neuroscientists are now tapping into what some artists may have already discovered about perception. See more images from the research below.

Carlos Ponce is an assistant professor in the Department of Neuroscience at the Washington University School of Medicine in St. Louis, Missouri.

Margaret Livingstone is the Takeda Professor of Neurobiology at Harvard Medical School in Boston, Massachusetts.

IRA FLATOW: It sounds like the beginning of a science fiction movie. Hook artificial intelligence up to a real brain. Let the two talk to each other and see what happens. That real setup did allow neuroscientists to probe the way a primate’s visual system works, essentially allowing a monkey’s brain to guide the design of new pictures. And the brain painted complex hallucinations of different colors and shapes and fragments of faces and eyes. And you can see samples up on our website at ScienceFriday.com/visualbrain.

So what do these dreamlike pictures tell us about the brain? Well, here to tell us is Carlos Ponce. He’s the lead author of that work out this week in the journal, Cell. He’s assistant professor in the Department of Neuroscience at Washington University’s School of Medicine right there in St. Louis. Welcome to Science Friday, Dr. Ponce.

CARLOS PONCE: Thank you for having me.

IRA FLATOW: Let’s back up for a second and talk first about how the brain sees. There are brain cells that respond to the face, right?

CARLOS PONCE: That is correct. So the primate brain– that’s the brain of monkeys and humans– has a region towards the back of the head in the visual cortex, right about behind your ears, called the inferior temporal cortex. And this part contains neurons that will respond to complex images like faces or hands or places.

And we know that these neurons are very special because, if they malfunction, people can lose the ability to recognize objects. I mean, a famous example is that of Oliver Sacks’s famous patient who mistook his wife for a hat. So the bet is that if we can understand these neurons, we can solve the problem of facial recognition as a whole.

IRA FLATOW: OK, so let’s– walk me through please what you did. You hooked up a monkey’s brain with electrodes.

CARLOS PONCE: Yes.

IRA FLATOW: And then follow it from there because that hardly describes what you did.

CARLOS PONCE: [LAUGHS] Well, yeah, so let me back up a little bit and kind of describe to you what we knew so far about this part of the brain. And what we found is that about 50 years ago, other folks had discovered that these cells existed by training animals to look at objects that were just laying around the lab while recording electrical impulses from the neurons in their brains. And this is how, for example, Charlie Gross discovered the existence of face cells and hand cells. These were neurons that responded most to pictures of faces and hands. And over the decades, we’ve continued to study cells in this exact same way, where, basically, the modern version is that we download images from the internet corresponding to broad categories defined by humans and then measure how cells respond to these categories.

And the problem is that, in virtually all of these experiments, we, the humans, the scientists, we choose what images to show the animal and the cells. And so in a way, there is no way to guarantee that a given stimulus set that we’ve chosen is going to contain the neurons’ preferred object because there is a practical infinity of images that we could choose. And this is the kind of problem that we were stuck in for decades until the machine learning community gave us a path forward.

IRA FLATOW: And describe what you did then please.

CARLOS PONCE: So there, what we did is we noticed that researchers in machine learning, who also care about visual recognition, had created a new kind of model. And these models are called generative adversarial networks, which I’m just going to call generators right now for simplicity. And what these generators do is that they learn to abstract distributions of objects in the world, for example, human speech or images of the natural world.

And so then what happens, when fully trained, is that one can input numbers into the generator to recreate images in the world or even create images of objects that don’t exist. It’s kind of semi-real objects.

So our co-authors, Will Xiao and Gabriel Kreiman, identified one of these generators that had been trained by folks at the University of Wyoming, Anh Nguyen and Jeff Clune. And Will developed a special algorithm called a genetic algorithm that would be able to take the responses of neurons in the brain and turn them into inputs for the generator. And he called this XDREAM.

IRA FLATOW: All right, let me just remind everybody so we have to pay the bills. This is Science Friday from WNYC Studios. I didn’t mean to interrupt you because we’re coming to a break. But let me see if I can summarize what you’re saying ’cause we’re getting a little bit into jargon that I’m afraid our audience might not be able to follow. So let me see if I can sum it up. And tell me if I’m right.

You’re connecting a machine learning network to a monkey’s brain. And it’s showing pictures to the monkey. And then you’re seeing how the brain responds and then making new pictures and doing it all over again until you get to the point where the brain says, aha, that is what I was thinking of. How close am I?

CARLOS PONCE: That is exactly right. We found a way for these neurons to suddenly– to be able to express to us the kind of image that they’re encoding independent of our choices. And what we found was quite surprising. We found that these neurons were producing images that resembled objects in the world but, in fact, were not objects in the world.
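Editor's sketch: the loop that Flatow summarizes and Ponce confirms above (show pictures, record the response, make new pictures, repeat) can be illustrated with a toy genetic algorithm. This is a minimal sketch under loud assumptions, not the study's actual code: `generator` is a stand-in that simply returns its input numbers as the "image", `simulated_neuron` is a hypothetical cell that fires more as the image approaches a hidden preferred pattern, and all parameter values are invented for illustration.

```python
import random


def generator(code):
    """Stand-in for the trained image generator (assumption): the 'image'
    is just the list of input numbers itself."""
    return list(code)


def simulated_neuron(image, preferred):
    """Hypothetical neuron: its firing rate rises as the image gets closer
    to a hidden preferred pattern that the experimenter never sees."""
    dist2 = sum((a - b) ** 2 for a, b in zip(image, preferred))
    return 100.0 / (1.0 + dist2)


def evolve(preferred, n_codes=20, n_generations=50, keep=5,
           mutation_sd=0.2, seed=0):
    """Toy closed loop: show images, score them by the neuron's response,
    keep the best codes, recombine and mutate them, and repeat."""
    rng = random.Random(seed)
    dim = len(preferred)
    codes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_codes)]
    best_per_generation = []
    for _ in range(n_generations):
        # "Record" the response to every image in this generation.
        ranked = sorted(
            codes,
            key=lambda c: simulated_neuron(generator(c), preferred),
            reverse=True,
        )
        best_per_generation.append(
            simulated_neuron(generator(ranked[0]), preferred)
        )
        # The codes that drove the cell hardest survive unchanged.
        parents = ranked[:keep]
        children = []
        while len(children) < n_codes - keep:
            mom, dad = rng.sample(parents, 2)
            # Each number comes from one parent, plus a small mutation.
            child = [rng.choice(pair) + rng.gauss(0, mutation_sd)
                     for pair in zip(mom, dad)]
            children.append(child)
        codes = parents + children
    return best_per_generation


history = evolve(preferred=[1.0, -2.0, 0.5, 3.0])
print(f"best response, first generation: {history[0]:.2f}")
print(f"best response, last generation:  {history[-1]:.2f}")
```

Because the top-scoring codes are carried over unchanged, the best response in this sketch can never drop from one generation to the next. The point is only that the scientist chooses no images: the cell's responses alone steer what is shown next.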

So if I can describe one particular experiment.

IRA FLATOW: Yeah.

CARLOS PONCE: So picture this. This is one of the early experiments we did. The monkey is sitting inside a little room. It’s watching images while he drinks juice from a little straw. And his job is just to look at the screen. In the meantime, we’re collecting electrical impulses from the neurons as the generator presents just black and white pictures.

And when you show them really quickly over time– we’re just listening to the first– the initial components of the responses of the cell. This looks like a bit of a haze, you know? It’s just like a moving haze in front of you.

And suddenly, when we’re doing these experiments, we found that, as we kept staring at these pictures, the neuron began to increase its firing rate in response to the images in such a way that the images began to take a specific form. And it looked like something started staring back at us. It was– it felt like it was eyes that started to appear in the image. They were not eyes, though. They were just black spots. But they stayed there.

And then they began to evolve into something more that looked like a face. It was like semi-circles around in the eyes, a few lines underneath, but much more abstract. However, while this is happening, the cell is firing action potentials in rates that are way above and beyond what we see them do in response to natural images. So we knew that these cells were telling us something real, something that they had learned about the world, and now, it was their chance to tell us what these things that they had learned were.

IRA FLATOW: So the monkey was sort of telling you what it was seeing.

CARLOS PONCE: Well, a cell in a monkey’s brain. So at this point, there are other parts of the brain that are more likely related to what the actual monkeys think. And so this is a more machine-like part of the brain that helps provide information to give rise to thoughts and memory.

IRA FLATOW: And we have samples of these images up at our ScienceFriday.com/visualbrain site, ScienceFriday.com/visualbrain. And the images you get there look surreal, impressionistic, almost like artistic abstractions.

We’re going to take a break. And when we come back, I want to ask you about these images and about the abstractions. So stay with us, OK? Dr. Ponce, we’ll be right back.

CARLOS PONCE: Sounds–

IRA FLATOW: We’re also going to bring on Margaret Livingstone, a professor of neurobiology at Harvard Medical School, who will talk about other research that deals with imaging and the brain. Stay with us. We’ll be right back after this break.

This is Science Friday. I’m Ira Flatow. If you’re just joining us, we’re talking about new investigations of the brain’s visual system, enabled by hooking artificial intelligence networks up to a living brain with my guest Carlos Ponce, Assistant Professor in the Department of Neuroscience at the Washington University School of Medicine in St. Louis. If you have any questions, our number, 844-724-8255, 844-724-8255. Or you can tweet us at @SciFri.

I’d like to bring on another guest now who also contributed to this study in the journal Cell. And that is Margaret Livingstone. She is the Takeda Professor of Neurobiology at Harvard Med School in Boston. Welcome to Science Friday, Dr. Livingstone.

MARGARET LIVINGSTONE: Thank you. Hi. Hi, Carlos.

CARLOS PONCE: Hello.

IRA FLATOW: I take it you know each other.

MARGARET LIVINGSTONE: Hi.

[LAUGHTER]

CARLOS PONCE: Marge taught me everything I know.

MARGARET LIVINGSTONE: We’ve worked together for years.

IRA FLATOW: We always bring people on who know each other.

Dr. Livingstone, you’re interested in art and the brain, correct? And some of those images are interesting in that they almost look like caricatures or Cubist renderings. Tell us about that.

MARGARET LIVINGSTONE: Well, so as Carlos said, the images that evolved from various neurons that we recorded from often looked sort of like a face or sort of like somebody we knew but almost like a caricature. And earlier work that we– so they were, like, leprechauny, or kind of gnomelike.

And we’d known from previous work that cells that are selective for faces are often most selective for faces that are extremes, caricatures, really big eyes and just faces, or real big faces on tiny bodies. And this is something that you see in art a lot too. And I think it reflects the way the brain codes things.

So the brain doesn’t have neurons for everything you see in the world. Instead, it tends– because then you’d have to have way too many neurons, right? Instead, it codes how things are distinct, how they differ from everything else.

IRA FLATOW: That is interesting. So those artists sort of were tapping into something in their brains about how they saw the world.

MARGARET LIVINGSTONE: Absolutely. That’s why I’m interested in art, not because I’m fundamentally interested in art. I’m interested in it because artists are really smart and they figure stuff out.

IRA FLATOW: And what did they figure– and what exactly did they figure out in these cases?

MARGARET LIVINGSTONE: Well, they figured out that caricatures are a better way of recognizing somebody than a veridical drawing. It’s easier to recognize a caricature of somebody than a line drawing.

IRA FLATOW: That’s quite interesting.

MARGARET LIVINGSTONE: It evokes who they are.

IRA FLATOW: And so they actually then just write those– draw those kinds of figures that reflect how we view them and–

MARGARET LIVINGSTONE: Yes. Yes. And lots of kinds of art do that. They figure out stuff about how our brains work. They don’t put it in neurobiological terms. But they figure out stuff that works. And it works because of the way our brains work.

IRA FLATOW: So should guys like Carlos be talking to artists more in their work?

MARGARET LIVINGSTONE: I do. I do.

CARLOS PONCE: I happened to marry one.

IRA FLATOW: [LAUGHS]

MARGARET LIVINGSTONE: Oh, yeah, there you go.

IRA FLATOW: For that reason?

CARLOS PONCE: Exactly. So I could– we can enrich each other’s academic life.

IRA FLATOW: Well, now, amplify on what Margaret was saying. Do you really– you know, you believe what she’s saying to be true.

CARLOS PONCE: Oh, absolutely. You know, and I can easily describe another night, one of the early nights that we started in some of these evolutions. And we found that this monkey’s cell gave rise to what I found to be a particularly unsettling image. And it was another image that looked like a face, but it had a big, big red eye where the regular eyes should be.

And I remembered that it affected me in a way that I often think art does. It felt like it had crossed into some kind of uncanny valley, something– it was revealing something about the way that I thought about faces. I showed that to my wife that very night. And that’s when I realized that there was something interesting happening.

IRA FLATOW: Interesting. So do you have any feeling about why the brain would have evolved this way, not to see a tree or a cat or a face, but to see these weird abstractions?

MARGARET LIVINGSTONE: Because it’s a population code. And if you want to code– so face cells, one of the things they care about is how far apart the eyes are, right? That’s just one of the things. That’s an observation. So you could either have a dozen cells that code for particular, plausible, primate inter-eye distances. Or you could have two cells, one that codes for big inter-eye distances and one that codes for small, and you get everything in between for free from the ratio of the two. So it’s an efficient way of coding things.
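Editor's sketch: the two-cell scheme Livingstone describes can be written out in a few lines. The tuning curves here are assumptions chosen for simplicity (straight lines, with eye spacing rescaled to run from 0 to 1), and the function names are hypothetical. The "ratio" is taken as one cell's rate divided by the sum of both.

```python
def encode(distance, gain=1.0):
    """Two hypothetical face cells with opposite preferences.

    `distance` is eye spacing rescaled to the range 0..1 (an assumption).
    `gain` stands for anything that scales both cells together, such as
    image contrast.
    """
    wide_cell = gain * distance            # fires most for widely spaced eyes
    narrow_cell = gain * (1.0 - distance)  # fires most for closely spaced eyes
    return wide_cell, narrow_cell


def decode(wide_cell, narrow_cell):
    """Read the eye spacing back out from the balance between the two cells."""
    return wide_cell / (wide_cell + narrow_cell)


for d in (0.1, 0.5, 0.9):
    wide, narrow = encode(d, gain=5.0)
    print(d, "->", (wide, narrow), "->", decode(wide, narrow))
```

Under these assumptions, every intermediate spacing is recoverable from just two cells, and the readout does not change when both cells fire more or less strongly together. That is one way to see why cells tuned to the extremes can be an efficient code.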

IRA FLATOW: You know, we see animals that have big spots on them. I’m thinking of, like, butterflies or other animals that might be prey to bigger animals. And that’s because the eyes– and they make them look like they have eyes so that maybe, you know, watch out if you come to eat me. It’s amazing–

MARGARET LIVINGSTONE: Absolutely.

IRA FLATOW: –you’re both talking about eyes here, how important they are.

MARGARET LIVINGSTONE: Eyes are very important to face cells.

CARLOS PONCE: Yes.

MARGARET LIVINGSTONE: Some of our cells just evolved a pink round thing with two big black spots.

IRA FLATOW: Wow. I mean, you know, you’re right. If our brains had to code for every single thing they might encounter, there wouldn’t be enough room in there.

MARGARET LIVINGSTONE: Yeah, some of them look like The Scream, right? I mean, they really did look–

IRA FLATOW: That’s interesting.

MARGARET LIVINGSTONE: –creepy.

IRA FLATOW: Yeah, that is creepy.

MARGARET LIVINGSTONE: So when people– there’s a syndrome people, humans can get, people who have low level vision loss in their central vision.

IRA FLATOW: Right.

MARGARET LIVINGSTONE: And they will occasionally hallucinate faces floating around. But they’re not normal faces. They’re leprechauny or gnomey. They have big eyes and they look funny, gargoyles. So I think that’s cells in their brain that aren’t getting any input just firing because they got– they needed to.

IRA FLATOW: Hmm. Go ahead, Carlos.

CARLOS PONCE: Oh, and I was going to say, the other thing that is interesting about this– I mean, we are talking about faces a lot. But a lot of these cells also gave rise to patterns and interactions of color and texture and shape for which we really have no word. It was as if– and you know, that’s a little bit more reminiscent of the kind of abstract art that a lot of artists can also come up with, things that defy, or rather, bypass our ability to call them anything.

And I think that gets us closer to another aspect that’s important about the brain. And that’s the idea of the visual vocabulary, the fact that the world has certain kinds of shapes that happen to occur commonly in certain categories. And brains are smart enough to know how to abstract them as well.

IRA FLATOW: Is there anything special– why do you work with monkeys? Is there anything special about how monkeys see, Margaret?

MARGARET LIVINGSTONE: They see just like us. Old world monkeys have a visual system just like us.

IRA FLATOW: What do you mean by that? What would not be just like us? You mean they see in full color like we do?

MARGARET LIVINGSTONE: Yeah, trichromacy.

IRA FLATOW: Mm-hmm.

MARGARET LIVINGSTONE: So all right, let’s talk about color vision. You have only three cone types. And only old world monkeys, among mammals, have three cone types, except for ground squirrels, I think. Yet you see millions of colors. And the way you do this is by having one cell that responds really well to long and is inhibited by short and then another that’s the reverse. So you just got these two opposite extremes, and you get everything in between that codes those millions of colors that you see.

IRA FLATOW: Do we have to learn how to see then starting out as a baby?

MARGARET LIVINGSTONE: Absolutely.

IRA FLATOW: Yes.

MARGARET LIVINGSTONE: Well, your visual system has a few simple rules that it uses to wire– your whole brain, not just your– we study vision because it’s a model for the rest of the brain that we can manipulate by showing things, right? So the whole brain wires itself up using a few simple rules about neurons that fire together should stay connected. And then after birth, these same rules allow you to learn to see what you encounter. Because the brain’s just a statistical learning machine. Whatever it sees it gets good at recognizing and discriminating.
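Editor's sketch: the rule Livingstone paraphrases, that neurons that fire together should stay connected, is often illustrated with a Hebbian-style weight update. The toy below is an assumption-laden illustration, not a model from the study: one downstream neuron receives two inputs, one that always fires in step with it and one that fires independently, and the decay applied when a pair does not fire together is an added assumption so that unused connections weaken.

```python
import random


def hebbian_demo(n_steps=5000, learning_rate=0.005, decay=0.0025, seed=0):
    """Strengthen a connection whenever input and output fire together;
    otherwise let it fade a little (the decay term is an assumption)."""
    rng = random.Random(seed)
    weights = {"correlated": 0.5, "uncorrelated": 0.5}
    for _ in range(n_steps):
        post = rng.random() < 0.5  # the downstream neuron fires half the time
        pre = {
            "correlated": post,                # always fires in step with it
            "uncorrelated": rng.random() < 0.5,  # fires independently
        }
        for name in weights:
            if pre[name] and post:
                # Fired together: move the weight toward its ceiling of 1.
                weights[name] += learning_rate * (1.0 - weights[name])
            else:
                # Did not fire together: the connection weakens slightly.
                weights[name] -= decay * weights[name]
    return weights


weights = hebbian_demo()
print(weights)
```

Both connections start equal. With these invented settings, the input that reliably fires with the downstream neuron should end up with the stronger connection, which is the statistical-learning idea in miniature.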

IRA FLATOW: Do we come– you talk about these caricatures of faces with the big eyes. Are babies born to recognize those sorts of big eye faces?

MARGARET LIVINGSTONE: It’s disputed. We think not. We think that you need actually to see faces in order to learn to recognize them.

IRA FLATOW: So a newborn baby is not interested in your face until it learns it’s your face.

MARGARET LIVINGSTONE: They’re interested in tracking small, round, dark things, especially in the upper visual field. So that does include faces, especially things that move. So I think it’s much lower level than an actual face template. But this is highly disputed in our field.

CARLOS PONCE: However, I would add that our results do provide evidence for the idea that the brain is very good at learning very quickly. And to give you an example, let me tell you about a monkey named George. And this is some experiments that were conducted by our group as well. Peter Schade and Marge did some of these experiments.

This animal had arrived to the labs when he was an infant. And so this animal had been exposed to humans wearing blue protective equipment and surgical masks. And what we found, when we studied the cells in this monkey–

MARGARET LIVINGSTONE: Because that’s what you have to wear in the monkey room.

CARLOS PONCE: –is that some of the cells– that’s what we have to wear in the monkey room to see them. And what we found is that some of the cells that this monkey had gave rise to images that contained those exact same features. So that’s something that natural selection could not have selected for. Rather, it provides evidence that natural selection gave rise to a very good learning mechanism that babies and infants have to learn about the world.

IRA FLATOW: Interesting. Let me go to the phones. [INAUDIBLE] in Phoenix. Hi, welcome to Science Friday.

SPEAKER: Thank you.

IRA FLATOW: Go ahead.

SPEAKER: My question is, do the images that you see resemble what people see typically in their dreams?

IRA FLATOW: Hmm.

MARGARET LIVINGSTONE: I think so. I think dreams and low level vision loss give you the same thing of cells firing off randomly that show you unreal things, surreal things.

IRA FLATOW: Interesting. A fan tweets in, “Will we be ever able to record our dreams from brain signals for later viewing?”

MARGARET LIVINGSTONE: [LAUGHS]

CARLOS PONCE: I believe that’s–

MARGARET LIVINGSTONE: But why do you need to do that? You got your own dreams. You see them when you–

IRA FLATOW: But if you want to share them.

CARLOS PONCE: [LAUGHS]

MARGARET LIVINGSTONE: Oh.

CARLOS PONCE: I actually believe–

MARGARET LIVINGSTONE: No, because it’s a population code. You’d have to record from every neuron in the brain.

CARLOS PONCE: But I would like to offer that this method that we’ve developed could, in principle, be applied to any kind of sensory neuron or any kind of neuron that encodes information about vision. And so, certainly, if we started looking at regions that encode working memories, it’s quite plausible that we’d be able to see something.

IRA FLATOW: Hmm. Let me just take a hmm break on Science Friday from WNYC Studios.

So you think that it might be possible, Carlos, to do that, because you already get an image that sort of– it’s sort of coming more into focus over time, correct, from the monkey’s cells?

CARLOS PONCE: Yes, I mean, we happen to study a visual area–

MARGARET LIVINGSTONE: It merges.

CARLOS PONCE: Yes. And we’re studying a visual area that is well-defined, that is also pretty sensory-based. But as one advances in the brain towards the more anterior parts of the brain, one begins to find neurons, like in the prefrontal cortex, that will respond to visual images, but also to other kinds of cognitive processes, thoughts, if you will. And certainly, we’re curious to know if we could apply this technology, these artificial intelligence models, to these parts of the brain. Would we extract something that more or less represents what an animal might be thinking of?

IRA FLATOW: And you were recording activity from neurons in this study in an animal. But could you do something similar non-invasively, like using fMRI in people?

CARLOS PONCE: I– well, I would offer that not with current technology. Because it does take a lot of image presentations to obtain the results that we have. And fMRI moves a little slower. But I’ll let Marge comment on that a little more.

MARGARET LIVINGSTONE: You might be able to. I mean, what we’re approaching now is the ability to use not just one neuron, but a population of neurons that you could record, let’s say, in an epilepsy patient. But fMRI is even coarser than that. So you might be able to get something out of it. But it wouldn’t be terribly informative any more than– eh, maybe it would.

IRA FLATOW: How do you know?

MARGARET LIVINGSTONE: Big, thousands of neurons at once is all you can get with fMRI.

IRA FLATOW: [INAUDIBLE]

MARGARET LIVINGSTONE: So all those thousands of neurons would– all you’d get is what they have in common, not what each of them is doing.

IRA FLATOW: So you just need a better machine is what you need.

MARGARET LIVINGSTONE: Oh, yeah, you come up with a better machine, we’ll use it.

[LAUGHTER]

IRA FLATOW: I’m going to leave the conversation there because– I really wish I could. I have the blank check question, but I don’t have the machine.

Margaret Livingstone is a Takeda Professor of Neurobiology at Harvard Med School in Boston. And Carlos Ponce, Assistant Professor in the Department of Neuroscience at the Washington University School of Medicine in St. Louis. Thank you both for taking time to be with us today.

CARLOS PONCE: Thank you.

MARGARET LIVINGSTONE: Sure.

IRA FLATOW: And I want to say again, we have samples of those images up on our website at ScienceFriday.com/visualbrain.

And that’s about all the time we have for this segment. One last thing before we go. You may recall a few years ago reports of a new kind of celestial phenomenon featuring a greenish striped glow, a mauve, purplish arc in the sky. Amateur astronomers and aurora geeks weren’t quite sure what to make of it. So they called it Steve.

Now, a group of researchers say they may have a line on what causes Steve. They coordinated a series of satellites to observe how energy was flowing in space and down towards the Earth when Steve appeared.

TOSHI NISHIMURA: There are two different types of energy flow coming in different ways into the upper atmosphere of the Earth. What’s first happening is a huge energy release deep in space. And then high energy particles come really close to the Earth, creating a jet stream of plasma, which create the mauve color of the arc.

On the other hand, a green arc is caused by a disturbance of high energy particles directly in the upper atmosphere. In that sense, the green emission is created in the same way as the regular aurora. But the mauve arc is very different.

IRA FLATOW: That’s Toshi Nishimura of Boston University, one of the authors of a paper on Steve published in the journal Geophysical Research Letters. So he says the mauve arc glow works in somewhat the same way an incandescent light bulb does, from the heating of charged particles higher up in the atmosphere. Researchers want to figure out exactly which kinds of particles are making that purple glow. The answer could be useful for helping predict space weather, including problems with radio reception. The researchers are still working on Steve too. So whatever Steve is, it’s not just a pretty sight.

Copyright © 2019 Science Friday Initiative. All rights reserved. Science Friday transcripts are produced on a tight deadline by 3Play Media. Fidelity to the original aired/published audio or video file might vary, and text might be updated or amended in the future. For the authoritative record of Science Friday’s programming, please visit the original aired/published recording. For terms of use and more information, visit our policies pages at http://www.sciencefriday.com/about/policies/

Christopher Intagliata was Science Friday’s senior producer. He once served as a prop in an optical illusion and speaks passable Ira Flatowese.

Ira Flatow is the founder and host of Science Friday. His green thumb has revived many an office plant at death’s door.