Computer Hacks of the Future, and How to Prevent Them

31:55 minutes

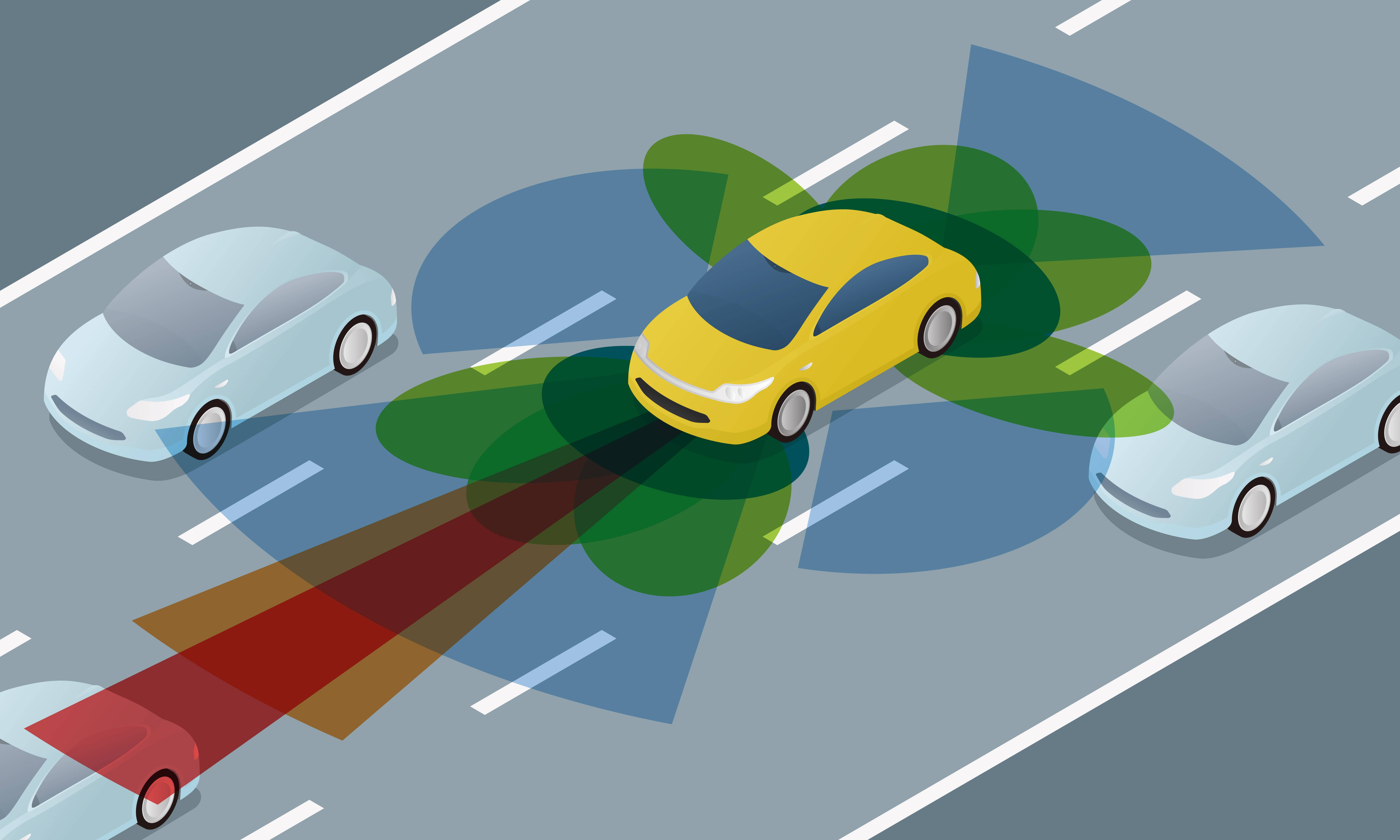

From home assistants like the Amazon Echo to Google’s self-driving cars, artificial intelligence is slowly creeping into our lives. These new technologies could be enormously beneficial, but they also offer hackers unique opportunities to harm us. For instance, a self-driving car isn’t just a robot—it’s also an internet-connected device, and may even have a cell phone number.

So how can automakers make sure their vehicles are as hack-proof as possible, and who will ensure that security happens? And as the behavior of artificially intelligent systems grows increasingly sophisticated, how will we even know if our cars and personal assistants are behaving as programmed, or if they’ve been compromised?

Some of the top thinkers in artificial intelligence and computer science will gather this weekend at Arizona State University’s Origins Project Great Debate to ponder those questions, and many more, about the potential challenges of an A.I.-dominated future, and the unique threats it poses to democracy, healthcare and military systems, and beyond.

For Science Friday’s take, Ira discusses the cybersecurity challenges of an A.I. world with a panel of computer scientists:

They debate the next generation of security risks and how to safeguard the A.I. technology we increasingly rely on.

Kathleen Fisher was the founding program manager for High-Assurance Cyber Military Systems (HACMS) at DARPA. She’s now a professor and Department Chair of Computer Science at Tufts University in Medford, Massachusetts.

Eric Horvitz is a technical fellow and director of the Microsoft Research Lab in Redmond, Washington.

Subbarao “Rao” Kambhampati is a professor of Computer Science and Engineering at Arizona State University and the president of the Association for the Advancement of Artificial Intelligence. He’s based in Phoenix, Arizona.

IRA FLATOW: This is “Science Friday.” I’m Ira Flatow. Self-driving cars, well, they’re almost here already. Digital assistants like Amazon’s Alexa are growing in popularity. The military is testing drones that could operate independently of human operators. How much of our lives might soon depend on artificial intelligence?

At Arizona State University this weekend, some of the top minds in computer science and artificial intelligence are gathering for the annual Origins Project Great Debates. They’ll explore the challenges AI could present in financial markets, health care, national security, and even our democracy. For the “Science Friday” take, we borrowed a few of them to focus on one question that often has me wondering: how secure will our self-driving cars, our digital assistants, and even military systems be from the hackers, hijackers, and others who mean harm? For example, could someone exploit computer vision to make my self-driving car see a yield sign instead of a stop sign? Or how do we make sure that military AI doesn’t make combat decisions based on the wrong information? And how would we know if something was amiss anyway? That’s what we’ll be talking about this hour.

If you’d like to join in, our number’s 844-724-8255. You can also tweet us at @SciFri.

Let me introduce my guests. Kathleen Fisher is the department chair and professor of computer science at Tufts University. She was also the founding program manager for High-Assurance Cyber Military Systems at DARPA. Welcome to “Science Friday.”

KATHLEEN FISHER: Welcome.

IRA FLATOW: You’re welcome. Eric Horvitz is a technical fellow and director at Microsoft Research Lab in Seattle and one of the organizers of The Origins Project Great Debate this weekend. Welcome to “Science Friday.”

ERIC HORVITZ: It’s great to be here.

IRA FLATOW: Subbarao Kambhampati is a computer scientist and engineering professor at Arizona State University in Phoenix and president of the Association for the Advancement of Artificial Intelligence. And they’re all joining us from the studios of KJZZ in Phoenix. We want to hear from you. What do you think? Our number is 844-724-8255. You can also tweet us at @SciFri.

Kathleen, before we get too deeply into our conversation about artificial intelligence, let’s talk a bit about how hackable our connected devices, our cars, and so on are today. For example, hackers hijacked DVRs and cameras and used them to crash many popular websites last October, didn’t they?

KATHLEEN FISHER: Indeed. Yeah, our cars and other internet of things devices are actually pretty hackable at the moment. Researchers have shown a variety of ways to hack into a typical modern automobile and rewrite all the software on the platform so that the car does what the hackers want it to do instead of what the original manufacturer wanted it to do. There are about five different ways hackers can reach a car from outside: for example, through the Bluetooth interface that lets you talk to the car without using your hands, or through the telematics unit that calls for an ambulance if you’re in an accident. Those are two different ways in which a hacker can break in. And once a hacker has broken in, they can change the software that controls how the car brakes, accelerates, or steers, along with lots of other functionality. Basically, cars are computers on wheels, networked computers on wheels, and are quite vulnerable to remote attack.

IRA FLATOW: That’s amazing. Eric, part of the excitement of AI is that it brings us new capabilities. Since we’re speaking about cars, for example, it allows machines to drive a car. And machines talk to us, too. But it’s also opening up new vulnerabilities of the sort Kathleen mentioned, is it not? I mean, there are unintended consequences, as we like to say.

ERIC HORVITZ: Right, so AI promises fabulous value to humanity, with contributions in health care and education, driving our cars, and helping us make progress in scientific fields. But when we build systems that are smarter, that use machine vision and machine learning to understand situations and make automatic decisions like steering on a road, we introduce what I refer to as new attack surfaces: new places where AI systems, other systems, and even human hackers could attack and inject new kinds of code and new kinds of imagery.

There’s a fabulous piece of work that just came out on using AI itself as the attacker of these components. One fabulous example came out of Berkeley, where a stop sign was analyzed and transformed into another stop sign. Human beings look at these two signs and see exactly the same thing. But the smart AI system understood the machine vision pipeline used by the car and could say with certainty that the car would see the altered sign as a big, bright yield sign.

IRA FLATOW: And stop signs are purposely made octagonal, right, so we know that’s the shape of a stop sign. And a yield sign is a triangle. But the hack confused the computer on the car.

ERIC HORVITZ: Right, these are new kinds of attacks. They even have a new name: machine learning attacks. How do you control the stream of data coming into a system, and understand in a devious, smart way how to make the system do things its designers did not want it to do, like making the car do the wrong thing?

The other challenge for cybersecurity is the human element. They say people are sometimes the weakest link in the security of systems. And in some, I would say, spine-tingling work going on, researchers have shown how AI can be used for social engineering. There are AI systems now being applied to get people to click on links: they look at large bodies of data, for example, lots of tweets, and learn to write tweets that senior people at companies would feel compelled to click on.

IRA FLATOW: Stop me. I can’t help myself.

[LAUGHTER]

IRA FLATOW: Rao, let’s talk a bit about my personal digital assistant, for example, the stuff I carry around in my pocket, on my cell phone, whatever. Not over the internet, but in person, could it be hacked also?

SUBBARAO KAMBHAMPATI: Well, I think, first of all, the reason your cell phone is useful right now is that it’s all connected. And so, in fact, some of the same attack interfaces are present there too. One interesting question, related to the point Eric was making, is that just as you can do image spoofing, you can do voice spoofing. So you could come to my home and be talking to me, and I wouldn’t notice anything wrong. And yet, you would be sending sounds to my Alexa, commanding it to do all sorts of interesting things.

All of this is worrisome, and I’m reminded of the beautiful Ani DiFranco lyric that “every tool is a weapon.” The more effective the tool is, the more interesting a weapon it can become. AI technology gives your programs the ability to perceive, plan, and learn, and so you can now use those abilities against them. That does open up interesting ways in which they can be compromised.

But as I think Eric pointed out earlier too, if AI is part of the problem, it is also a huge part of the solution. In fact, even in conventional cybersecurity, many of the techniques being used to fight off attackers come from game-theoretic techniques in AI, including some of our work on moving target defense systems, where AI techniques can do a much better job than the simple techniques people use. So it’s very much a part of the solution there.

IRA FLATOW: So did I hear you say that AI systems can mimic my voice? I mean, we use our voices to identify ourselves. So somebody could figure out a way to fake my voice? Not that anybody would want my voice, but–

SUBBARAO KAMBHAMPATI: One of the very interesting questions we are trying to understand about these AI systems is whether, when a system sees or hears, it is doing so the same way humans do. Take the stop sign example Eric mentioned earlier: after the stop sign is changed, people still see it as a stop sign. Similarly, people might see an image as a school bus, and yet the system might see it as an ostrich. So we are at the beginning of understanding machine vision and machine perception. When these systems work, they work almost like us, but when they fail, their failure modes are quite different from ours. And that can lead to interesting kinds of failure, as well as to their being hijacked.

ERIC HORVITZ: I was also going to say, Ira, I think we can all assume that within a few years, AI systems and advanced graphics and rendering will be able to spoof your identity with really high fidelity, even videos of you talking and saying things, videos of leaders. I’ll be showing some examples tomorrow night in the public session of some very compelling technologies that show how this can be done.

And the reason we’re getting together is to really flesh out some of these adverse AI outcomes, because that’s the way you can solve them. And as Rao pointed out a second ago, AI is also part of the solution. Not just AI, but mechanism design of various kinds and new kinds of proactive work to address all these challenges: that’s why we’re here. We’re here not just to call out problems, but to come up with solutions before they become big problems for everybody.

IRA FLATOW: Kathleen, what do you think are the most vulnerable things now? I mean, the self-driving cars are not really here yet. What about stuff that’s here?

KATHLEEN FISHER: Well, the self-driving cars aren’t here yet, but cars that are connected to the outside world via networks are already here. And in those cars, computers already control all the features that a hacker would need in order to take over the car. So it’s already possible for a hacker to remotely break into a car that’s on the highway and take over, make the acceleration not work, maybe the braking. In fact, it happened last summer. There was a “Wired” magazine reporter in a Jeep Cherokee, barreling down the highway, when two white hat hackers broke in and took over. They disabled the transmission, so the car basically stopped on the highway. Luckily, nobody was hurt. But that technology already exists.

There are lots of internet of things devices that are essentially built without any security concerns in mind. People are excited about the functionality and the services that these new devices provide and rush to get products out there. And we don’t have a very good way of ensuring that these devices are built to good security standards. It’s very hard to measure security, and it’s very hard to monetize security. It’s really unclear who pays for security, and whether consumers would pay a premium for devices that are more secure. So the result is that there’s a vast number of internet of things devices that are connected and insecure. And we regularly see reports from the hacking community about this kind of device: your refrigerator was hacked. Your television was hacked. Your pacemaker was hacked. Your insulin pump was hacked. Your car was hacked, and on and on and on. So there’s widespread vulnerability.

IRA FLATOW: Let’s go to the phones. 844-724-8255, to Washington, DC. Hi, Judy. Welcome to “Science Friday.”

JUDY: Hello?

IRA FLATOW: Hey, there. Yeah, you’re on.

JUDY: Wow, OK. Hi. Thanks for taking my call. The person who was just speaking actually mentioned pacemakers. I was going to ask about that. I was talking with a young man who goes into the OR and calibrates pacemakers. And I asked him whether they’re hackable. And he said as far as he knows, his company’s pacemakers are not hackable, but he does not know across the board whether that’s possible. And since it seems that technology in general gets put forward without examining the downsides, I just wanted to ask your guests what they thought about that kind of thing.

IRA FLATOW: Let me just remind everybody that this is “Science Friday” from PRI, Public Radio International. OK, guests, what do you think? Pacemakers: hackable or not hackable?

ERIC HORVITZ: Yes, there have already been demonstrations of this. I refer people to Kevin Fu’s work, for example. He’s looked very carefully at medical cybersecurity at Michigan. We find all sorts of interesting problems and challenges. Even intravenous pumps have been infected with malware that no one knows is there. And the pumps are running very slowly. What’s going on?

KATHLEEN FISHER: Jerome Radcliffe showed that he could wirelessly hack his insulin pump to cause it to deliver incorrect dosages of medication. He presented that at the Black Hat conference maybe five years ago. And I think when Cheney had his pacemaker implanted, they had the wi-fi functionality turned off. The wi-fi capability is there for a really good reason.

IRA FLATOW: Well, that was the plot on “House of Cards,” wasn’t it? The vice president was killed. His pacemaker was hacked.

KATHLEEN FISHER: Yeah, “Elementary” has had a number of plot lines that revolved around cybersecurity problems. I mean, the functionality exists for a really good reason. It’s very useful that doctors can monitor what’s going on with the pacemaker. So on the whole, it’s a tremendous advantage that the pacemaker is wi-fi enabled. But there is this downside. And my opinion is we need to pay more serious attention to making sure that the security vulnerabilities are addressed upfront, rather than after terrible things have happened.

IRA FLATOW: Eric, we’re coming up to a break, but I want to get in one point about one of the scariest aspects of artificial intelligence, and that’s using these systems in warfare. If by their nature the systems work in a way that is beyond human comprehension and out of the control of humans, how will we know if the information they’re acting on in wartime is accurate? Is it really a cause for launching missiles, or just a mistake?

ERIC HORVITZ: This is one of my top concerns right now. Automation has always found its first home in military systems, given the competitive nature of that endeavor. AI, of course, has led to new capabilities. And of course, it’ll be used in a variety of ways: automation of surveillance, automation of decision-making.

There’s a 2012 US DoD directive that said we need to have meaningful human control in the loop with weaponry. As the uses of AI grow in weapon systems, and as time criticality grows to the point where the first to strike wins, it’s unclear whether we really can ensure meaningful human control, especially with opaque systems that have to act very quickly.

The other challenge with military systems that I think is quite destabilizing is that when we field systems, we typically don’t have very good models of how interacting AI systems will behave, with agents on two sides of an adversarial conflict. The complexity of that is typically beyond the ken of the designers.

IRA FLATOW: You mean, it’s like a chess game between the AI systems.

ERIC HORVITZ: At very fast time scales and with negative consequences.

SUBBARAO KAMBHAMPATI: And so I think having a human being in the loop on all these very fast-turnaround systems raises its own question of realism. So eventually, I think we almost need AI systems monitoring other AI systems. That just has to be the way we’ll have to go, because you could have somebody in the loop, and yet even in something like the movie “Eye in the Sky,” it was becoming very hard to figure out whether people were actually in control. And faster [INAUDIBLE] will make it even more questionable.

I think, really, it’s going to be an arms race. As Kathleen pointed out, we are using these tools because they’re very useful. But everything that’s useful can also be hijacked. And you don’t give up on that. You make security and safety a maintenance goal, and you have to use the same technology to work toward ensuring it.

IRA FLATOW: We have to take a break. We’ll come back with this really interesting discussion with Kathleen Fisher, Eric Horvitz, and Rao Kambhampati. Our number, 844-724-8255. You can also tweet us at @SciFri. Lots of tweets coming in. You might want to follow us as we do the show, interesting stuff. We’ll be right back talking more about cybersecurity and artificial intelligence after this break.

This is “Science Friday.” I’m Ira Flatow. We’re talking about the next generation of cybersecurity threats in a world increasingly using artificial intelligence. My guests are Kathleen Fisher, department chair and professor of computer science at Tufts; Eric Horvitz, technical fellow and director at Microsoft Research Lab in Seattle; and Rao Kambhampati, a computer science and engineering professor at Arizona State University.

Before we begin, a little bit of housekeeping here. Some tweets have been coming in. Correcting me, Alex Wilding says that the pacemaker episode was on “Homeland,” not on “House of Cards.” Of course. Of course it was. How did I get that wrong?

We have other tweets. I’ll just read them off, and we’ll go through them a little later. “How do you think the recent death of SHA1 plays into this?” SHA1 is a cryptographic code that was recently cracked by researchers. A tweet from Jen says, “Would taking the human element out of driving outweigh the consequences of potential hacking with overall safety rules?” Maybe we need to keep the people up.

Let me follow up on a very interesting point that was brought up right before the break, and that is whether AI can protect itself. Do we need AI to watch over AI? Kathleen, do you agree with Rao about that?

KATHLEEN FISHER: I think it offers promising possibilities. I think the challenges–

IRA FLATOW: That’s so diplomatic of you.

[LAUGHTER]

KATHLEEN FISHER: Sorry, I’m an academic by training. We’re trained to hedge. No, I think that something like that will probably be essential, in that the timescales are such that humans won’t be fast enough to meaningfully intervene in many cases. And in other cases, the timescales will be so long that humans won’t be able to intervene meaningfully either. There are examples of people in self-driving cars who just stop paying attention because the car is doing such a great job. People are really bad at paying close attention for a long period of time when it seems superfluous or not useful. So I think with either timescale, too short or too long, people won’t really be able to meaningfully monitor. And the alternative is an artificial intelligence system.

The problem is that then you’re limited by the scope of the artificial intelligence system, how you built it, how you trained it, what you anticipated, and what you built into it, or what it was able to infer. The sort of unknown unknowns are an ongoing challenge.

IRA FLATOW: Let’s go to the phones. I’m sorry. Go ahead.

ERIC HORVITZ: Yeah, a quick comment is that there is really fabulously interesting research going on giving AI systems the ability to understand unknown unknowns and to know their own limitations. And this is a direction for making the technology more robust and making even our cars better drivers.

IRA FLATOW: That they would know what the unknowns are. Then they’re not unknowns anymore.

ERIC HORVITZ: They would start to learn about their own blindspots with a layer of reasoning about that in particular.

IRA FLATOW: They would know their limitations. So at some point, they would just signal their driver, like, you take over.

ERIC HORVITZ: As people who know me very well know, I drive one of these cars. And I’ve pushed it to the limit in terms of its ability to do its own thing versus scream at me and so on. So it’s very interesting trying to drive one of these self-driving, or semi-self-driving, cars.

IRA FLATOW: How many times have you had to take over from the car?

ERIC HORVITZ: Oh, it’s, of course, all the time. And you’ve got to stay alert.

IRA FLATOW: You’re not giving me a lot of confidence here. Let’s go–

ERIC HORVITZ: Not today. Not today.

KATHLEEN FISHER: It’s early.

ERIC HORVITZ: And I should say, back to that tweeter, when we look at the downside of these technologies, we definitely lose track of the upside. There are 35,000 deaths per year from human driving, and almost a quarter of a million deaths per year from preventable errors in hospitals, in the US alone. In both of those places, AI would provide incredible boosts and save many, many lives. There will be some trade-offs. We always have to consider the upside as well, not just focus on the downside.

IRA FLATOW: Could AI help prevent gun deaths?

ERIC HORVITZ: You know, there’s an interesting department right now that just came together at USC, bringing the Center of Social Work together with the computer science department. It’s one example of other kinds of work going on that look at really hard societal challenges. So without giving you a flippant yes, of course, you can imagine that we could start thinking about how to collect data to better understand the root causes of why guns are used and so on, and address them with systems that are data-centric. And so AI might have a role there.

IRA FLATOW: Let’s go to the phones, to Chicago, to the Windy City with Wallace. Hi, welcome to “Science Friday.”

WALLACE: Yes, good afternoon. I was in electronics for a number of years, in the old style, when we first came out with integrated circuits. I’m not up to date with the new technology, but I’m interested: why can’t systems prevent hacking? Is it because hackers are getting into the system over the airwaves? Or is it through a direct wire?

KATHLEEN FISHER: So in a car, it’s the airwaves. There are about five different ways you can hack into a car through the airwaves. The reason it’s relatively easy to break into systems is that getting really good security is a super hard problem. There are many, many dimensions that you have to get right if you want the system to be secure. It’s like an analogy with a house. To make your house secure, it’s not enough to lock the front door. You have to lock the front door and the back door. And that’s not enough. You have to lock all the windows.

It’s the same thing in a computer. You have to lock every entryway. You have to make sure that your users are well trained, so that they don’t give away the password to somebody who calls on the phone and convincingly claims to be from the IT department and to need the password. You have to have the hardware be correct. There are just many, many things that you have to get right. And if you don’t get them all right, then there’s a vulnerability that a hacker can break into.

SUBBARAO KAMBHAMPATI: And I think, eventually, there is no such thing as an unhackable system. I keep thinking that before 2001, we did not know that we had to be careful about planes flying into buildings. That’s just not something that we took into account. In general, I guess, we are going to keep discovering new kinds of security breaches. So it’s an ongoing thing, unless you want to shut the systems off from the world, in which case, of course, they’re not of much use at all.

ERIC HORVITZ: I think it’s important to remember that it’s not just about mechanisms, machinery, and designs; it’s also about regulation, laws, and treaties. For example, we could have treaties about what kinds of systems can be fielded in military settings. We could have treaties that say nation states should not attack civilian populations, private infrastructure, and democratic institutions with cyberattacks. In fact, just last week, I’m proud to say, Microsoft announced in a policy statement that we need to have a digital Geneva Convention. So there are a lot of people in society in the loop here. It’s not just about fixing the computing.

KATHLEEN FISHER: Indeed.

SUBBARAO KAMBHAMPATI: Except I think one issue there is that the technology is there [INAUDIBLE] in CS and AI being extremely open. So it may not even be just big state actors; a high school kid sitting in a garage can do lots of interesting damage. And so it is going to be a continuing arms race between good and bad users.

IRA FLATOW: Speaking of that, we have an interesting tweet from Vasu, who writes, “Do we currently have any security standards for internet of things devices? And who is monitoring their evolution?” I mean, we make standards for all kinds of things, ANSI standards. You know what I’m talking about, those kinds of standards. Are there any standards saying that if you make an internet of things device, it has to be at least somewhat hack-proof?

KATHLEEN FISHER: Well, hack-proof is a concept that isn’t well-defined. You have to talk about–

IRA FLATOW: Right, it’s not defined yet anywhere.

KATHLEEN FISHER: You always have to talk about hacking with respect to a threat model: what kinds of things can you imagine an attacker doing? But no, there are very few standards for the security of those kinds of devices. I think the aviation industry takes it the most seriously. But that’s an outgrowth of the fact that they take the safety of their code the most seriously and spend a lot of time doing manual code reviews. I think Senator Markey from Massachusetts proposed a five-star security rating for cars, like the crash-safety ratings. But that didn’t go anywhere in Congress. So there have been some efforts, but nothing that is–

ERIC HORVITZ: But there is work afoot to think through best practices and, potentially, one day, standards. A group was recently created called the Partnership on AI to Benefit People and Society. Amazon, Apple, Facebook, Google, DeepMind, IBM, and Microsoft got together, agreed upon a set of tenets, and are working together through a nonprofit organization arrayed around best practices. Security and privacy are part of that kind of deliberation.

IRA FLATOW: Let’s go to the phones, to Washington to Jeff. Hi, welcome to “Science Friday.”

JEFF: Hey, Ira. Thanks for taking my call. Love the show. I wanted to know what people were thinking about Isaac Asimov’s three laws of robotics. I know it’s 50-plus years old. But is anybody taking it into consideration when making these security systems?

IRA FLATOW: Good question. Rao, one of those laws says a robot can’t attack people. It will not attack its own human.

SUBBARAO KAMBHAMPATI: Indeed. In fact, even several years back, people were talking about the first law of robotics, which essentially means taking Asimov’s laws and including them in the design of the robot. The interesting issue, of course, is that these are very general laws. And it’s not necessarily obvious to a machine at a given point in time whether it is actually harming somebody or trying to help them. So there is a whole set of paradoxes people talk about, about the machine assuming that it knows more about what’s good for you than you really know.

And there’s more recent work, actually, out of Berkeley, I think, on what’s called the off-switch problem, where you should be able to shut off your AI system. This is, I guess, a much more far-fetched, long-term scenario. The nice thing I like about it is that it’s really what bosses do to control their employees: always keep what is expected somewhat ambiguous. If you keep that ambiguous, then the system can never be completely sure what you want, and so it allows itself to be shut off. But yes, indeed, Asimov’s laws are sort of becoming the foundation right now.

IRA FLATOW: I’ve got so many questions and so little time. I’m trying to get as many in as I can. Let’s talk about something I think is also important to our discussion, and that’s our ability to hold free and fair elections, given the risk of hacking online or of voting machines.

ERIC HORVITZ: You know, before you even go to voting machines, Ira, one of my top concerns is that AI systems will be used and already have been used to automatically and algorithmically persuade people in a stealthy way to change their beliefs and to not vote or to vote differently. And I think this is going to be a very, very important rising crisis for democracy and free thinking.

IRA FLATOW: I’m Ira Flatow. This is “Science Friday” from PRI, Public Radio International. Can you give me an example of that?

ERIC HORVITZ: Well, listen, there were lots of reports out of the last election about a company called Cambridge Analytica that claimed to have worked very, very diligently at figuring out how to sequence messages to the right people based on their Facebook profiles, at least by report. There were also reports back during the 2008 Obama campaign of companies doing interesting data mining to figure out how to ideally allocate resources and whom to target for changing votes in swing states.

IRA FLATOW: All right, I’ve got to change the subject. I know, I have so much to talk about. I’ve got to hurry up. But I want to get to the voting machines.

ERIC HORVITZ: Sure.

IRA FLATOW: What are your feelings about that?

KATHLEEN FISHER: Yeah, I think voting machines are susceptible to hacking. I think an interesting thing is that the more you know about computers, the less comfortable you are with electronic voting. Historically, there have been coalitions of computer scientists who’ve gotten involved in the political process to educate lawmakers about the dangers of mandating electronic voting. Basically, laypeople who are not into computer science tend to trust computers to do the right thing. But in fact, computers do what they’re told to do. And software is designed to be updated and replaced. So a voting machine is going to count according to the latest version of the software that’s on it. That could be the correct version of the software, installed by the people running the election. But it could be that it was hacked by somebody, or somebody stuck in a thumb drive and changed the code. And then it counts however the newly added code tells it to.

People have been doing a lot of research on how to build more secure voting systems. Such systems would come with an audit trail that you can check retroactively in a physical way.

IRA FLATOW: Well, how do we supervise this happening or not? How do you surveil this, make sure it doesn’t happen?

KATHLEEN FISHER: Yeah, so in the research, you want to provide a way for the person who voted to confirm that the system recorded the vote the way they expected, in a physical form, so that there’s a paper trail you can later go back and check to make sure that the physical vote matched the electronic vote. It’s very important that the voter can’t show that physical proof to other people, because that opens up the possibility of buying votes. So it’s a very delicate combination of computer science, algorithms, social processes, and our understanding of voting.

So it’s not something to be done casually. It has, again, huge upsides. It would allow our military people to vote when they’re overseas. There’s lots of upsides to it. But it has to be done very carefully. And I’m concerned that it’s not being done carefully to date.

IRA FLATOW: I have to stop this conversation. This is great. We’ll have to continue this. I’m running out of time. I want to thank Kathleen Fisher, Eric Horvitz, and Subbarao Kambhampati. And thank you all. And have a great meeting at Arizona State University this weekend.

Copyright © 2017 Science Friday Initiative. All rights reserved. Science Friday transcripts are produced on a tight deadline by 3Play Media. Fidelity to the original aired/published audio or video file might vary, and text might be updated or amended in the future. For the authoritative record of ScienceFriday’s programming, please visit the original aired/published recording. For terms of use and more information, visit our policies pages at http://www.sciencefriday.com/about/policies/

Christie Taylor was a producer for Science Friday. Her days involved diligent research, too many phone calls for an introvert, and asking scientists if they have any audio of that narwhal heartbeat.

Christopher Intagliata was Science Friday’s senior producer. He once served as a prop in an optical illusion and speaks passable Ira Flatowese.