How Innovation Happens in the Digital Age

An excerpt from “The Innovators.”

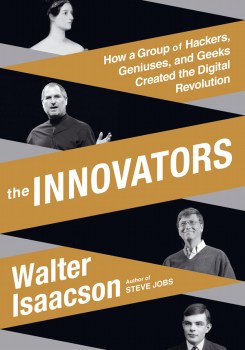

The following is an excerpt from Walter Isaacson’s The Innovators.

The computer and the Internet are among the most important inventions of our era, but few people know who created them. They were not conjured up in a garret or garage by solo inventors suitable to be singled out on magazine covers or put into a pantheon with Edison, Bell, and Morse. Instead, most of the innovations of the digital age were done collaboratively. There were a lot of fascinating people involved, some ingenious and a few even geniuses. This is the story of these pioneers, hackers, inventors, and entrepreneurs—who they were, how their minds worked, and what made them so creative. It’s also a narrative of how they collaborated and why their ability to work as teams made them even more creative.

The tale of their teamwork is important because we don’t often focus on how central that skill is to innovation. There are thousands of books celebrating people we biographers portray, or mythologize, as lone inventors. I’ve produced a few myself. Search the phrase “the man who invented” on Amazon and you get 1,860 book results. But we have far fewer tales of collaborative creativity, which is actually more important in understanding how today’s technology revolution was fashioned. It can also be more interesting.

We talk so much about innovation these days that it has become a buzzword, drained of clear meaning. So in this book I set out to report on how innovation actually happens in the real world. How did the most imaginative innovators of our time turn disruptive ideas into realities? I focus on a dozen or so of the most significant breakthroughs of the digital age and the people who made them. What ingredients produced their creative leaps? What skills proved most useful? How did they lead and collaborate? Why did some succeed and others fail?

I also explore the social and cultural forces that provide the atmosphere for innovation. For the birth of the digital age, this included a research ecosystem that was nurtured by government spending and managed by a military-industrial-academic collaboration. Intersecting with that was a loose alliance of community organizers, communal-minded hippies, do-it-yourself hobbyists, and homebrew hackers, most of whom were suspicious of centralized authority.

Histories can be written with a different emphasis on any of these factors. An example is the invention of the Harvard/IBM Mark I, the first big electromechanical computer. One of its programmers, Grace Hopper, wrote a history that focused on its primary creator, Howard Aiken. IBM countered with a history that featured its teams of faceless engineers who contributed the incremental innovations, from counters to card feeders, that went into the machine.

Likewise, what emphasis should be put on great individuals versus on cultural currents has long been a matter of dispute; in the mid-nineteenth century, Thomas Carlyle declared that “the history of the world is but the biography of great men,” and Herbert Spencer responded with a theory that emphasized the role of societal forces. Academics and participants often view this balance differently. “As a professor, I tended to think of history as run by impersonal forces,” Henry Kissinger told reporters during one of his Middle East shuttle missions in the 1970s. “But when you see it in practice, you see the difference personalities make.” When it comes to digital-age innovation, as with Middle East peacemaking, a variety of personal and cultural forces all come into play, and in this book I sought to weave them together.

The Internet was originally built to facilitate collaboration. By contrast, personal computers, especially those meant to be used at home, were devised as tools for individual creativity. For more than a decade, beginning in the early 1970s, the development of networks and that of home computers proceeded separately from one another. They finally began coming together in the late 1980s with the advent of modems, online services, and the Web. Just as combining the steam engine with ingenious machinery drove the Industrial Revolution, the combination of the computer and distributed networks led to a digital revolution that allowed anyone to create, disseminate, and access any information anywhere.

Historians of science are sometimes wary about calling periods of great change revolutions, because they prefer to view progress as evolutionary. “There was no such thing as the Scientific Revolution, and this is a book about it,” is the wry opening sentence of the Harvard professor Steven Shapin’s book on that period. One method that Shapin used to escape his half-joking contradiction is to note how the key players of the period “vigorously expressed the view” that they were part of a revolution. “Our sense of radical change afoot comes substantially from them.”

Likewise, most of us today share a sense that the digital advances of the past half century are transforming, perhaps even revolutionizing, the way we live. I can recall the excitement that each new breakthrough engendered. My father and uncles were electrical engineers, and like many of the characters in this book I grew up with a basement workshop that had circuit boards to be soldered, radios to be opened, tubes to be tested, and boxes of transistors and resistors to be sorted and deployed. As an electronics geek who loved Heathkits and ham radios (WA5JTP), I can remember when vacuum tubes gave way to transistors. At college I learned programming using punch cards and recall when the agony of batch processing was replaced by the ecstasy of hands-on interaction. In the 1980s I thrilled to the static and screech that modems made when they opened for you the weirdly magical realm of online services and bulletin boards, and in the early 1990s I helped to run a digital division at Time and Time Warner that launched new Web and broadband Internet services. As Wordsworth said of the enthusiasts who were present at the beginning of the French Revolution, “Bliss was it in that dawn to be alive.”

I began work on this book more than a decade ago. It grew out of my fascination with the digital-age advances I had witnessed and also from my biography of Benjamin Franklin, who was an innovator, inventor, publisher, postal service pioneer, and all-around information networker and entrepreneur. I wanted to step away from doing biographies, which tend to emphasize the role of singular individuals, and once again do a book like The Wise Men, which I had coauthored with a colleague about the creative teamwork of six friends who shaped America’s cold war policies. My initial plan was to focus on the teams that invented the Internet. But when I interviewed Bill Gates, he convinced me that the simultaneous emergence of the Internet and the personal computer made for a richer tale. I put this book on hold early in 2009, when I began working on a biography of Steve Jobs. But his story reinforced my interest in how the development of the Internet and computers intertwined, so as soon as I finished that book, I went back to work on this tale of digital-age innovators.

The protocols of the Internet were devised by peer collaboration, and the resulting system seemed to have embedded in its genetic code a propensity to facilitate such collaboration. The power to create and transmit information was fully distributed to each of the nodes, and any attempt to impose controls or a hierarchy could be routed around. Without falling into the teleological fallacy of ascribing intentions or a personality to technology, it’s fair to say that a system of open networks connected to individually controlled computers tended, as the printing press did, to wrest control over the distribution of information from gatekeepers, central authorities, and institutions that employed scriveners and scribes. It became easier for ordinary folks to create and share content.

The collaboration that created the digital age was not just among peers but also between generations. Ideas were handed off from one cohort of innovators to the next. Another theme that emerged from my research was that users repeatedly commandeered digital innovations to create communications and social networking tools. I also became interested in how the quest for artificial intelligence—machines that think on their own—has consistently proved less fruitful than creating ways to forge a partnership or symbiosis between people and machines. In other words, the collaborative creativity that marked the digital age included collaboration between humans and machines.

Finally, I was struck by how the truest creativity of the digital age came from those who were able to connect the arts and sciences. They believed that beauty mattered. “I always thought of myself as a humanities person as a kid, but I liked electronics,” Jobs told me when I embarked on his biography. “Then I read something that one of my heroes, Edwin Land of Polaroid, said about the importance of people who could stand at the intersection of humanities and sciences, and I decided that’s what I wanted to do.” The people who were comfortable at this humanities-technology intersection helped to create the human-machine symbiosis that is at the core of this story.

Like many aspects of the digital age, this idea that innovation resides where art and science connect is not new. Leonardo da Vinci was the exemplar of the creativity that flourishes when the humanities and sciences interact. When Einstein was stymied while working out General Relativity, he would pull out his violin and play Mozart until he could reconnect to what he called the harmony of the spheres.

When it comes to computers, there is one other historical figure, not as well known, who embodied the combination of the arts and sciences. Like her famous father, she understood the romance of poetry. Unlike him, she also saw the romance of math and machinery. And that is where our story begins.

Excerpted from The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution by Walter Isaacson. Copyright © 2014 by Walter Isaacson. Reprinted by permission of Simon & Schuster, Inc. All Rights Reserved.

Walter Isaacson is the author of Elon Musk and a professor of history at Tulane University in New Orleans, Louisiana.