Predicting the Future of Robotics

34:39 minutes

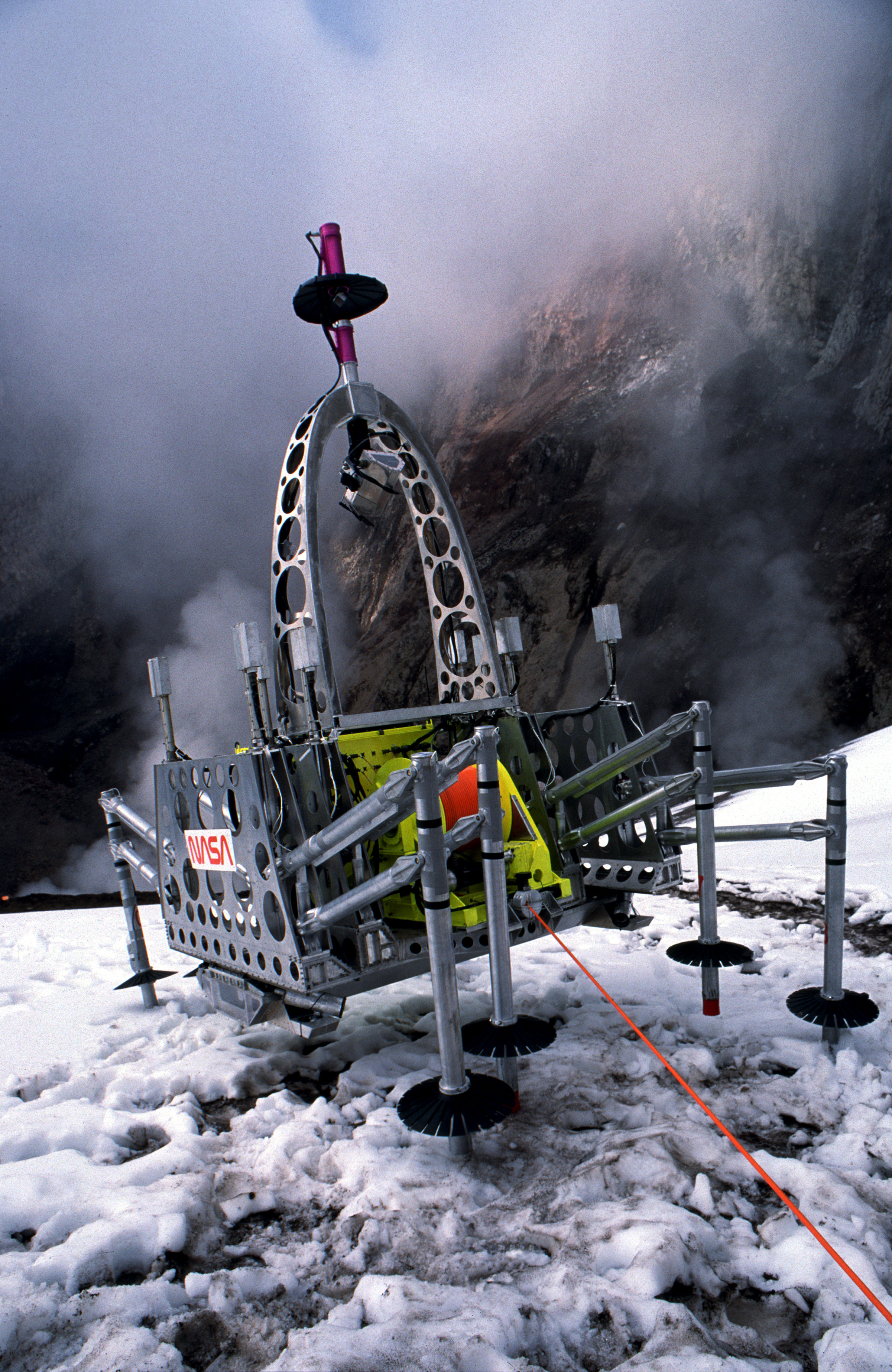

In 1994, Dante II, a 1,700-pound robot built by Carnegie Mellon and NASA, rappelled into the volcano of Mt. Spurr at the rate of one centimeter per minute. The team wanted to test the capabilities of autonomous systems and robotic mobility at the time. In August of that year, Science Friday brought together a group of experts to discuss the trends in robotics: the move from complicated, highly programmed machines to smaller, interconnected swarms; where robots could be useful; and the ethical dilemmas the technology raised. One of those guests was Charles Thorpe, a senior research scientist at the Robotics Institute, who was working on developing an autonomous car. He returns to Science Friday to discuss how far robotics has come in the last two decades.

And as engineers build squishy biological-machine hybrids, with mouse muscles and sea slug mouthparts, how far are we from creating truly living machines? A look at the future of ‘bio-bots’ and the unintended consequences of combining flesh, neurons, and mechanical parts.

Hillel Chiel is a professor of biology, neurosciences, and biomedical engineering at Case Western Reserve University in Cleveland, Ohio.

Charles Thorpe is the Senior Vice President and provost at Clarkson University. He’s based in Potsdam, New York.

Ritu Raman is a mechanical engineer and postdoctoral fellow at Massachusetts Institute of Technology. She’s based in Cambridge, Massachusetts.

IRA FLATOW: This is Science Friday. I’m Ira Flatow. This year, we’re celebrating Science Friday’s 25th year– hard to believe. We’re celebrating by revisiting a few of our conversations from the archive, conversations about big science issues that have popped up in the past two decades. And as you know, robots have always been a favorite topic for science geeks.

And back in 1994, the pop culture idea of robots was dominated by the human-like, artificially intelligent Terminator. This was just three years after Terminator 2: Judgment Day. So we were thinking about that. The reality of robot technology, though, wasn’t exactly The Terminator. The top research robot at that time was called Dante II. It was a 1,700-pound robot that walked at a rate of one centimeter per second, kind of crawled.

And Dante II was built by NASA and Carnegie Mellon. And the team sent it into a volcano to put that technology to the test. And in 1994, I talked to Mark Rosheim, who was an author and engineer, and Charles Thorpe, who was a research scientist at the Robotics Institute at Carnegie Mellon. And in this clip, Dr. Thorpe describes Dante II and another research project he was working on at the time.

Dante II is safe. I say Dante II is safe. A little bit worse for wear, but safe. Dante II is the robot that successfully climbed down a volcano in Alaska but fell over trying to get out of it and was finally rescued this week by helicopter. Dante is now awaiting a reunion with its loved ones from the Carnegie Mellon Robotics Institute in Pittsburgh.

Dante was designed to climb in and out of volcanoes or the craters of planets, where humans fear to tread or find it too impractical to go themselves. Charles Thorpe is a senior research scientist with the Robotics Institute of Carnegie Mellon University in Pittsburgh and head of the Nav Lab project there, charged with building robot trucks. He joins us from station WQED in Pittsburgh. Welcome to the program.

CHARLES THORPE: Well, thank you, Ira. How are you doing this afternoon?

IRA FLATOW: Fine. How are you today?

CHARLES THORPE: Pretty good.

IRA FLATOW: Great. Let me see if I can get an update on Dante II first, Dr. Thorpe. Where is Dante these days? Is it still out there in Alaska?

CHARLES THORPE: Dante is coming back from Alaska on the slow boat.

IRA FLATOW: Literally.

CHARLES THORPE: Literally. The people who went up to Alaska are coming back now, and I’ve seen some of them just starting to show up on campus the last couple days. But the robot itself, there’s no particular hurry to bring it back. So it’s coming back by slower means.

IRA FLATOW: And that is one of the things that robots do these days. They go into places where people fear to tread– dangerous places like volcanoes. But they might also work in– they’ve been sent into nuclear power plants, places like that.

CHARLES THORPE: Oh, sure. When we started working on mobile robots, our big emphasis was working on robots in hazardous environments. So we’ve had a couple teleoperated vehicles down in the basement of Three Mile Island. There have been other robots in a variety of other nuclear facilities. But some of our attitude is changing a little bit. We’ve just realized in the last couple years that highways are a pretty hazardous environment.

So we have some work now with the Department of Transportation trying to see if we can use the same technology that drives robots just to watch how people drive and to do things like wake them up if they’re starting to fall asleep and drift off the road.

IRA FLATOW: I was just reading in an article that scientists have just discovered how people turn a car, how they watch the angle of the curve so they know how to make that turn. And that was done specifically to teach a robot how to drive a car itself, what to look for as the cues on the road to make that turn.

CHARLES THORPE: There is some very interesting work on how people steer, and there’s also some very interesting work on what are people looking at. It’s not just a matter of, once you know where the road goes, how do you steer to get around there? It’s a matter of, where are people looking? Are they looking at lines? Are they looking at other cars? Are they looking at shoulders? How are they interpreting the images?

IRA FLATOW: You’re building a robot truck, is that correct, working with a robot truck?

CHARLES THORPE: That’s right. I have a couple of robot trucks called the Nav Lab project, for Navigation Laboratory, a robot truck and a robot Humvee.

IRA FLATOW: Why would you have one? I can see for military purposes, instead of a soldier you’d send a robot out.

CHARLES THORPE: The original purpose was to study just basic driving technology for the military. They want to see things like, can I get a pair of eyeballs over the next hill and see what’s out there, or can I find where the landmines are without endangering people’s lives? But we also have this work now from the Department of Transportation, which is looking at using the same robot, using some of the same techniques, but using it to say, how would you have to drive if you really wanted to help people?

IRA FLATOW: That was Charles Thorpe back there in 1994. Charles Thorpe joins us again to talk about how far robotics has come in the last 22 years and where the technology might go in the future. Now he’s vice president and provost of Clarkson University in Potsdam, New York. Welcome back.

CHARLES THORPE: Well, Ira, thanks for having me back. You never warned me in ’94 that you were going to give me a pop quiz 20 years later.

IRA FLATOW: Seems like yesterday, doesn’t it?

CHARLES THORPE: It does, until you look at what’s happened in robots since 1994.

IRA FLATOW: Well, let’s talk about– you were working on that self-driving car way back then. Was it completely autonomous? Is it a different idea from what people are working on now?

CHARLES THORPE: We had the basics of the completely autonomous vehicle way back then. But we were also starting to work on, if it’s not completely good enough for prime time, how do you use all of that robotic technology to watch when you’re driving and keep you from becoming an accident statistic? So it’s been very gratifying to see the technology we were working on 22 years ago start to save lives, start to wake people up who are drifting off the road, start to do emergency braking and just the beginnings, with people like Tesla, of automated driving.

IRA FLATOW: Let’s talk about that robot in the volcano, Dante II. Was that robot an important device that you created? Did it teach you something that we’re still using today?

CHARLES THORPE: Dante II is very important for three reasons. One is the mechanical design of the thing. How do you rappel down into very rugged terrain? Second is the sensing– how do you look around and see where the rocks are and decide where to put your feet? But the third really important one is the user interface. NASA was sitting at home in Pasadena, California, controlling this thing as it went down into a volcano in Alaska, trying to practice what it would be like to sit at home in Pasadena and control a robot that’s exploring a lava tube on the moon.

IRA FLATOW: Our number is 844-724-8255 if you’d like to talk about robots and Dante and where we’ve been in the last 20 years, 22 years. Also you can tweet us at scifri. Where do you think robots are headed now? Is getting self-driving cars the main thrust that we’re seeing today?

CHARLES THORPE: Well, it certainly is one of the areas, using this robotic technology in everything from self-driving cars to assistance for visually impaired people to better factories. But there are some surprises that came along that we didn’t expect. We expected this gradual growth of rough terrain robots, this fairly rapid growth of factory robots. Nobody in ’94 anticipated the Roomba.

There are now, I believe, about eight times as many robot vacuum cleaners as all other robots in the world put together. And you can go out and find 200 videos of cats riding around on their Roombas on YouTube. The notion of getting a robot into every home and getting people to treat them like they’re pets and getting the pets to treat them like they’re pets, that was something that we really hadn’t anticipated. And that’s pretty exciting.

IRA FLATOW: Because in The Jetsons, we saw robots walking around, cleaning up with a vacuum cleaner, but not that kind of vacuum cleaner.

CHARLES THORPE: Not that kind of vacuum cleaner, but that’s one of these interesting illustrations, that the notion of a robot vacuum cleaner in ’94 was a humanoid robot pushing a vacuum cleaner. And the notion of a robot chauffeur in ’94 was a humanoid robot sitting behind the wheel of a car. We find that as the robotic technology gets better, it’s harder and harder to see. Roomba kind of looks like a flying saucer. Doesn’t look like a robot. A Tesla, you can’t even see the self-driving technology. It’s all buried under the hood and behind the grille.

IRA FLATOW: And I want to expand our conversation to bring on a few more guests as we continue our robot tour. And these are people who are building things that look almost like half-animal and half-machine. They’re called biobots, a hybrid of animal cells and 3-D printed parts that sort of make you wonder whether we should start calling these things living machines. Let me bring them on and we’ll talk about it, and you’ll see what I mean. Hillel Chiel is a professor of biology, neurosciences, and biomedical engineering at Case Western Reserve University in Cleveland. Welcome to Science Friday.

HILLEL CHIEL: Hi Ira. Nice to talk with you.

IRA FLATOW: You’re welcome. Ritu Raman is a mechanical engineer at the University of Illinois at Urbana-Champaign. Welcome to Science Friday.

RITU RAMAN: Hi, Ira. Happy to be here.

IRA FLATOW: Hillel, tell us what you have built here. It’s what, part sea slug and part what?

HILLEL CHIEL: Well, let me say first that the actual people who were the key engineering people, Vicki Webster was the grad student on this, and Roger Quinn who I’ve collaborated with for years, they would probably be talking to you but they’re at the Living Machines Conference that’s happening in Edinburgh right now. So that’s why I’m here. I’m the biologist.

We’ve for years been studying the sea slug, Aplysia californica, and how it feeds. And we had this rather crazy idea that we could take a muscle from the feeding apparatus and put it onto a 3-D printed framework, put that together, stimulate it electrically, and get something that walked. And it worked.

IRA FLATOW: So you stimulate the sea slug and it moves and it has mechanical parts on it?

HILLEL CHIEL: Well, actually, no. This is just a muscle from the sea slug and a 3D printed framework around it, which is what provides the friction, so that when the muscle contracts from the electrical stimulation, one part moves forward and then the other part moves forward.

IRA FLATOW: Wow. And what do we call this? What do we call this–

[INTERPOSING VOICES]

HILLEL CHIEL: We call it a biobot. But a hybrid robot is another term that people are using.

IRA FLATOW: Dr. Raman, your team took a different approach. Instead of using an existing animal part for your bot, you actually built the part from scratch using mouse cells. Tell us about that, please.

RITU RAMAN: Sure. So the mouse cells that we use are optogenetic, which means that they have been genetically engineered to respond to blue light. So they contract whenever you shine blue light on them. So we take these cells that have been engineered and we mix them with a bunch of different types of proteins that mimic the extracellular matrix inside the body. So the cells, when they’re around these proteins, they basically compact to form a 3D muscle ring or rubber band-like structure that contracts the way that real muscle that you could excise from an animal does.

So then we do a similar thing where we couple the muscle that we’ve engineered to a 3D printed skeleton made of a soft compliant plastic. And then we can trigger stimulation, blue light stimulation, and muscle contraction.

IRA FLATOW: Wow. So you make the muscle out of the stem cells?

RITU RAMAN: They’re not stem cells. They’re just a mouse cell line.

IRA FLATOW: Can you do it with other parts of the body besides a muscle?

RITU RAMAN: Yeah. We are working on other cell types as well, for example, trying to use neurons to control the muscle and let it make decisions about when it wants to contract, as well as working with vascular cells or cells from blood vessels to make sure that we can efficiently transport nutrients into the biobots. We started with muscle, because force production and walking is just the most exciting thing. And people like to see that.

IRA FLATOW: Wow. And how do you get them to contract? What is the stimulus you use?

RITU RAMAN: It’s just blue light flashing from an LED. So every time the blue light flashes, an ion channel that we’ve put inside the cell membrane opens. Ions flow in, and the muscle takes that as a signal to contract.

IRA FLATOW: Let me just remind everybody, I’m Ira Flatow. This is Science Friday from PRI, Public Radio International, talking about this strange new world of biobots with Charles– with Hillel Chiel and Ritu Raman. This is really, really kind of interesting stuff. What do you think about these biobots, Charles Thorpe? Did you imagine that we’d be putting robotics into sea slugs, that engineers would become biologists, basically?

CHARLES THORPE: Well, we’ve just frustrated everybody who plays 20 Questions and wants to know, is this animal, mineral, or vegetable?

IRA FLATOW: That’s what I was wondering. And at what point do we call them alive or something?

CHARLES THORPE: Well, this is the step toward cyborgs. What is this mix of artificial parts and biological parts? And we know that there are some things that the world of biology does really, really, really well that we haven’t figured out how to do mechanically. And so some of this coupling is going to be really exciting.

IRA FLATOW: Ritu, could you control a fleet of these bots remotely and make them work together somehow?

RITU RAMAN: Yeah, that’s actually some of the motivation behind the work we do. It’s part of a larger NSF center called the Emergent Behavior of Integrated Cellular Systems, or EBICS. So part of what we want to do is learn, both on the small scale and on the large scale when many of these biobots are interacting with each other, what kind of more complex behaviors can emerge from those interactions.

IRA FLATOW: Hillel, I saw the video of these slug bots, as I’m going to call them. They don’t move terribly fast.

HILLEL CHIEL: No. But one of the comments I would make is that slugs in general don’t move fast. But anyone who has a vegetable garden knows that moving slowly is not a bar to finding your goals, if you have time.

IRA FLATOW: Why was the slug chosen as the animal to use?

HILLEL CHIEL: One of the things that makes Aplysia very convenient for this is it’s an intertidal animal. That means that as the tide goes out, they’re left in these little tide pools. And the consequence of that is that the sun may beat down. The temperature goes up. It may rain, and so the osmolarity changes. And so they have to be pretty robust.

And so that means that they can handle a whole range of temperature and other conditions that cells from animals like ourselves have much more difficulty with. Our cells are used to being at about 37 degrees centigrade, or 98.6 Fahrenheit. And if you’re much out of that range, they die. Aplysia neurons and muscle cells can survive from relatively cold temperatures, six, seven degrees centigrade, up to a hot day in Las Vegas, probably. They still need water, but they can manage. They can manage quite well.

IRA FLATOW: I know Eric Kandel won a Nobel Prize for his work with the sea slugs.

HILLEL CHIEL: That’s right. I actually did my post-doc with someone who had been a post-doc with him.

IRA FLATOW: I think he did it because they had giant neurons, nerve cells.

HILLEL CHIEL: Exactly. And that’s another very exciting aspect. We’re hoping to actually use some of the organized collections of nerve cells, the ganglia in these animals, as a basis for control. One of the difficulties you run into– and it’s interesting. I am also collaborating with Ozan Akkus and Umut Gurkan, who are trying to do the kind of bioengineering that we’re talking about here, where you create scaffolding and put cells in, and so on.

One of the difficulties is, you’re trying to reverse engineer the developmental process. And we still don’t understand how that works exactly. So sometimes, using something that’s the end product may be faster or more effective than trying to reverse engineer it ourselves. So these ganglia could be used for control, we think. And that’s what we hope to do.

IRA FLATOW: All right. We’re going to take a break and come back and talk lots more about these biobots. We don’t really have a good name for them yet. Maybe we’ll coin one here on Science Friday. Our number, 844-724-8255. You can also tweet us at scifri. Talking with scientists, going back in the vault looking back to 1994, we’ll hear a few more excerpts from our show in 1994 about robotics.

And let us know what you think about it. Stay with us. We’ll be right back after the break. This is Science Friday. I’m Ira Flatow. We’re talking this hour about robots, how far has the technology come in the past two decades? What will it look like in the future? It’s all part of our celebration of Science Friday’s 25th anniversary.

My guests are Charles Thorpe, vice president and provost of Clarkson University in Potsdam, New York, Hillel Chiel, professor of biology, neurosciences and biomedical engineering at Case Western Reserve University in Cleveland. Ritu Raman is a mechanical engineer at the University of Illinois at Urbana-Champaign. So we’re going to go back to the way back machine, back again with our conversation with Charles Thorpe back in 1994. Remember, Charles, you and Mark Rosheim discussed what shape future robots might take.

Mark Rosheim, you brought up the term anthrobot, and your book focuses on anthrobots. What is your definition of the difference between a robot and an anthrobot? Does it have to look human?

MARK ROSHEIM: Well, it has to look human to function like a human. You can’t have human functioning hands that don’t look to some extent like a human hand.

IRA FLATOW: Could you not improve on humans, though?

MARK ROSHEIM: Well, you might in power and strength. But as far as physical dexterity, you got to appreciate how beautifully complex the human hand is and the human body. You could argue– I’ve heard these stories all over the years about, we can make a robot like a squid or something. Well, squids can’t drive cars and squids don’t work– you would have a very hard time working in a factory. So I really see the human template as the most sophisticated template and most important to emulate.

IRA FLATOW: And all the time, people are trying to emulate the human body and recreate different parts that mimic human parts. Because obviously, that’s been evolving for millions, perhaps billions of years, and who knows? Everything’s been tried maybe sometime in the past.

CHARLES THORPE: Well, that’s sort of yes and no. A lot of the emphasis for building humanoids is if you want them to perform humanlike tasks. So for instance, if you want to go into some of these old nuclear reactors, the robot better be able to walk up and down stairs. And it better be about the size of a human, because that’s the size that the whole thing was designed to be serviced by and visited by.

On the other hand, if what you want to do is to have a robot truck, you don’t want to have something that looks like an anthropomorphic being sitting there taking up the driver’s seat. You want to save all of the seat room for the passengers. You want the smarts to be hidden underneath the hood and in the trunk, next to the CD changer, and make it look much more like a car. Because that’s what it really is, a smart car, and not make it look like an anthropoid.

IRA FLATOW: That CD changer remark tells you it was back in 1994, that conversation with Charles Thorpe. Charles is here with us. Charles, is there still focus on building those big, complicated robots? How is the thinking about shape and design of robots– how is that changing at this time?

CHARLES THORPE: Well, one place where we in the robotics world did not succeed was the Fukushima nuclear reactor. First of all, who would have predicted that there would be a tsunami and an earthquake and a nuclear reactor right next to the ocean? We should have seen that one coming. We were late with the robotic response to Three Mile Island. We were late with the robotic response to Chernobyl.

The robots that were ready to go into Fukushima had tracks and looked like tracked vehicles and were unable to get up and down the stairs, unable to see what they needed to see. When I was working in the White House, I called a meeting of the robotics people and said, why did we have such bad response to Fukushima, and how could we change that going forward? The result of that little workshop was the DARPA grand challenge that led to a bunch of humanoid robots that could walk, that could drive an ATV, that could turn a doorknob, that could go up and down stairs, that could operate in the built environment of a place like Fukushima.

So one answer is that there continues to be this thrust of building humanoid robots for humanoid environments. This is also a place where if you had really good biobots, a sea slug could crawl up and down those stairs and have no problem at all.

IRA FLATOW: Anybody else want to comment?

HILLEL CHIEL: Yeah, I was just going to jump in, because one of the things that octopi are really good at is complex manipulation. And having tentacles is really helpful, especially if you have to squeeze your way through– well, let’s imagine a building has been partially wrecked by an earthquake. You don’t necessarily have stairs anymore.

You may have pieces of rubble. You may have stones or other things in the way. And now being a soft device that can actually squeeze its way through could be very, very helpful. So yeah, I think if you had the right level of intelligence and you had a device that was soft, there could be some advantages to that.

IRA FLATOW: Ritu, do you have a comment?

RITU RAMAN: Yeah. I also think when we think about humanoid, we’re thinking about the whole body, and can we replicate that? And that might be a little bit far off in the future for a lot of us. But you can still take parts of the human body and be inspired by that. So for example, for our biobots, if you look at something like the elbow, where you have two bones that are connected by a joint and every time the muscle contracts, you get the joints moving closer together.

So that’s very similar to the biobot that we designed where the beam is very flexible and sort of mimics a joint. And then you have two legs that connect the muscle together. So I think when we think about anthropomorphic, it doesn’t have to be on the macro scale all the time.

IRA FLATOW: Our number is 844-724-8255. Let’s open the phones for some interesting comments from our listeners. Gainesville, Florida– Martin, you’re up first. Welcome to Science Friday.

MARTIN: Great, thanks for taking my call. I just have a quick question, and then I’ll hang up and listen for the answer. What sort of ethical standards are being applied to this research? It seems like it’s very fast emerging, much like genetic engineering has been. Could you discuss briefly what sort of ethics apply, and how do you decide what direction you’re going to go in regarding biobots?

IRA FLATOW: All right. Anybody want to jump in?

[INTERPOSING VOICES]

IRA FLATOW: Ritu, you go first.

RITU RAMAN: Sure. So as I said, our research is part of a National Science Foundation Center. And one big requirement of that grant and of all the researchers that work on it is that every year, we meet at a retreat. That’s happening next week, actually. And we come up with different sort of ethical modules or vignettes and discuss situations of what happens if biobots do this? What happens if biobots start doing that? What kind of engineering controls can we implement to make sure that not only are we doing interesting designs, but we’re doing responsible and safe designs?

HILLEL CHIEL: I just wanted to add that back in 1975, the Asilomar conference that Paul Berg and Maxine Singer put together was a very important way to start to address the questions of recombinant DNA and its implications. And I strongly agree that having those kinds of conferences by people who are engaged in this area is a wise thing to do, as soon as possible.

One of the things that emerged is that if you have appropriate rules and regulations, it helps in an enormous number of ways in terms of creating commercially viable products, reducing people’s anxiety, and also allowing people to be heard about their concerns, which is very important from the very beginning.

IRA FLATOW: We have a tweet in from Ramsey [? Karam ?], who says, do robotics engineers take into consideration the uncanny valley thing when discussing anthrobots? The uncanny valley thing?

CHARLES THORPE: Sure. The uncanny valley is a phenomenon that we didn’t expect.

IRA FLATOW: Explain what that is, for people that don’t know.

CHARLES THORPE: You start out with a cute doll robot. People say, aw, isn’t that a cute doll. If you make the cute doll a little more lifelike, you put real human hair on there, you say, oh, that’s an even cuter doll. When you make it a little more lifelike and it starts to blink its eyes and turn its head and coo, all of a sudden it’s so close to lifelike it becomes zombie-like.

So the theory is if you make a robot really human, it’ll be cool. If you make it really not human, it’s cool. But there’s some place in between where you hit the valley of coolness and it scares the bejabbers out of you. Nobody completely understands what exactly it is that it triggers. But it’s the point where you really expect this to have fully human responses, and it’s not quite. That’s the scary part.

IRA FLATOW: It’s creepy.

CHARLES THORPE: It is. And that’s one reason why you might want to make robot dogs or something, where you don’t have that same expectation of truly human responses.

IRA FLATOW: And now you’re going to offend all the dog lovers today, Charles.

MARK ROSHEIM: Robot slugs are not going to be much of a problem.

IRA FLATOW: Let me go back a little bit, back to 1994, back to that conversation you and I had, Charles. We had a caller who had a question about artificial intelligence and how future robots might learn tasks. Let’s go to Noah in Berkeley. Hi. Welcome, Noah.

NOAH: Hi. I have to go back to Isaac Asimov, because he wrote a lot of science fiction about robots. And he used the term positronic brain a lot. That was the basis for his robots’ intelligence. So I’m wondering, are people today moving towards making the computers that run robots more like the neural networks that we have in our own bodies?

HILLEL CHIEL: The whole field of neural nets is loosely inspired by the human brain. So if you look at a standard computer like a PC, the usual explanation is that it does one instruction at a time. And if you look at the way our brain works, it’s not nearly as fast as a computer. But there are lots of things happening in parallel.

So neural nets, which are a new topic in computer science and robotics, are sort of loosely inspired by that idea of doing a lot of things in parallel. They don’t really look nearly as complicated as a human neuron. They don’t have all the dendritic chains and so forth. But it’s the idea of parallel processing, of changing weights, of trying to learn things rather than program things and so forth.

IRA FLATOW: Charles, back then neural networks were a very hot topic, if you recall. Is it still?

CHARLES THORPE: Neural nets were a huge advance, because it was one of the ways of learning really complicated things. One of my robots learned if this is what the image looks like, here’s where you have to turn the steering wheel and was able to learn in about two minutes how to drive on any given road. Since then, there have been lots of other techniques developed like neural nets to analyze big patterns.

The real question that we still have going on in artificial intelligence is, how much of it is just accumulating an awful lot of patterns, and how much of it is conscious thinking and rules and so forth? If you look at something like Watson, part of the success of Watson was just the enormous amount of data that it had access to, and some of the ways of looking at the patterns. But more than that, the rules for accessing it.

IRA FLATOW: Ritu, any comment? Don’t have to. While you collect your thoughts– I know I scared you by surprise. I’m Ira Flatow. This is Science Friday from PRI, Public Radio International. Let me ask you a different question, because I’m running out of time.

HILLEL CHIEL: Could I jump in just for a sec? One thing I should comment on is neural nets– this goes back to Pitts and McCulloch. The experience biologists often have with engineers is that they come, they ask a few questions, they say, great, thanks, and then they disappear for a while. And they do some cool things, but neurons are actually incredibly complicated. An individual neuron has the complexity, really, of what we think of as a microprocessor.

And that’s not what’s going on in neural nets right now. If you started to put that kind of complexity in and provided some of the flexibility that you actually see, even in slug brains, but certainly in ours, I think there might be even more exciting things that neural networks could do.

IRA FLATOW: Well, you led to the question I was thinking of, thinking how complex even those individual neurons are, Ritu. I don’t want to get spooky here, but when you build with biology, isn’t it possible that some behavior could emerge that you didn’t predict? Something greater than the sum of the parts?

RITU RAMAN: Yeah, absolutely. That’s the definition of emergent behavior. And the idea is that of course, there’s these small individual components, and something happens on the macro scale that we can’t predict from the smaller parts. But if you talk to people sometimes from physics or developmental biology, they’ll say, no, you actually can predict those things. We just don’t understand the rules yet.

So I think part of what we need to do as a community of researchers is be open to the idea of trying these things, having engineering controls in place to make sure that we can control for any kind of unforeseeable outcome, but learn how we can control them and not just say, we never know what’ll happen. We don’t know what’s going to happen now, so we need to study it and make sure that we can understand those rules for the future.

IRA FLATOW: When we were talking just now, when you said– I can’t remember who said it– that the neuron in the slug is as complicated as a computer chip–

HILLEL CHIEL: That’s what I said, yeah.

IRA FLATOW: Does that imply that we can program the neuron like a computer?

HILLEL CHIEL: In fact, people are starting to do things like that using optogenetics. They have actually shown that you can implant memories into a mouse’s brain using the appropriate stimuli, and you can bypass the normal sensory inputs by appropriately activating the neurons at the right time. So in fact, if you have the right interfaces with the nervous system, you could do an incredible amount of control that would be hard to imagine doing right now.

IRA FLATOW: Wow. That brings me to a tweet from Annette [? Lange, ?] who is a high school teacher. And she wants to know, how do I prep my students for this field? What programming language?

HILLEL CHIEL: The first thing is that they should learn a lot of math. That's the first thing I would say. They should also know their humanities as well, because they want to understand a lot about human behavior and social issues. And I think it's also important to learn biology, physics, and chemistry.

And they should as much as possible follow their curiosity, because that’s going to drive them to actually learn what they really need to know as they go forward in the field, because the field’s going to keep moving forward. And what you learn in high school right now is just a foundation for what you’re going to need to be able to teach yourself as you go forward.

IRA FLATOW: So as the field moves forward, what’s the next thing we should be looking for?

HILLEL CHIEL: Well, I think genetic engineering combined with biorobotics may be some of the most exciting stuff. Ritu has spoken a little bit about optogenetics in terms of the muscles. If you can do that in the neurons, there’s a lot that you can do in that case to modify patterns of activity and do some very interesting control.

IRA FLATOW: Ritu, should we be seeing more complex machines out of this?

RITU RAMAN: Yes. So we’re working right now on incorporating different types of cell types and working on the biological aspect of this. But speaking to the other side, I think right now the kinds of synthetic materials that we use are just plastics that don’t really respond in any way. If we could work with more traditional engineers who are working on making smart synthetic materials and combine those with our smart biological materials, I think that’ll be a really great output for the future.

CHARLES THORPE: Here’s how we’ll know if Ritu and Hillel have succeeded. If 20 years from now someone says, we better test the Russian Olympic team to see if they have any biorobotic technology injected into their athletes because of the pioneering work done by these two, we’ll know that their technology has succeeded.

IRA FLATOW: All right, we’ll all meet back here in 20 years.

RITU RAMAN: All right. Sounds good.

IRA FLATOW: Charles Thorpe, vice president and provost at Clarkson University in Potsdam. Hillel Chiel, professor of biology, neuroscience, and biomedical engineering at Case Western Reserve University in Cleveland. Ritu Raman is a mechanical engineer at the University of Illinois at Urbana-Champaign. Thank you all for taking time to be with us today. Charles Bergquist is our director, and our senior producer is Christopher Intagliata.

Our producers are Alexa Lim, Annie Menoff, Kristi Taylor, and Katie Hiler. Luke Roskin is our video producer. Rich Kim is our technical director. Sara Fishman and Jack Horowitz are the engineers at the controls here at our production partners, the City University of New York. Have a great, great weekend. I'm Ira Flatow in New York.

Copyright © 2016 Science Friday Initiative. All rights reserved. Science Friday transcripts are produced on a tight deadline by 3Play Media. Fidelity to the original aired/published audio or video file might vary, and text might be updated or amended in the future. For the authoritative record of Science Friday's programming, please visit the original aired/published recording. For terms of use and more information, visit our policies pages at http://www.sciencefriday.com/about/policies.

Christopher Intagliata was Science Friday’s senior producer. He once served as a prop in an optical illusion and speaks passable Ira Flatowese.